Seems like we are done with our CustomProcessor (a GitHub link to the repo is at the end of this article). Instead of writing to a job queue for every request that updates the database, we insert an entry into another database table (called outbox) which contains a description of additional work that needs to be done. You can create both stateless and stateful transformers. I also didn't like the fact that Kafka Streams would create many internal topics that I didn't really need and that were always empty (possibly due to my own silliness). This means that if you restart your application, it will re-read the topic from the beginning and re-populate the state store (there are certain techniques that could help to optimize this process, but they are outside the scope of this article); after that, it keeps both the state store and the Kafka topic in sync.
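To make the restart behaviour concrete, here is a minimal sketch in plain Java. It assumes the changelog topic can be modelled as an in-order list of key-value records and the state store as a `HashMap`; `ChangelogRestore` and `rec` are hypothetical names, not Kafka Streams APIs. Replaying from the beginning lets later values overwrite earlier ones, and a `null` value is treated as a tombstone that deletes the key, which is how a changelog topic encodes deletions.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in: a HashMap plays the role of the local state store and
// a List plays the role of the changelog topic, read from the beginning.
class ChangelogRestore {

    // Builds one changelog record; SimpleEntry allows a null value (tombstone).
    static Map.Entry<String, Integer> rec(String key, Integer value) {
        return new SimpleEntry<>(key, value);
    }

    // Replays the changelog in offset order: a later record for a key overwrites
    // an earlier one, and a null value (tombstone) deletes the key.
    static Map<String, Integer> restore(List<Map.Entry<String, Integer>> changelog) {
        Map<String, Integer> store = new HashMap<>();
        for (Map.Entry<String, Integer> record : changelog) {
            if (record.getValue() == null) {
                store.remove(record.getKey());
            } else {
                store.put(record.getKey(), record.getValue());
            }
        }
        return store;
    }

    public static void main(String[] args) {
        List<Map.Entry<String, Integer>> changelog = List.of(
                rec("a", 3), rec("b", 3), rec("a", 6), rec("b", null));
        System.out.println(restore(changelog)); // prints {a=6}
    }
}
```

In the real library this replay happens automatically on startup; the sketch only shows why a full re-read rebuilds the same state.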


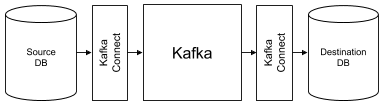

At Wingify, we have used Kafka across teams and projects, solving a vast array of use cases. Let's add another method called `findAndFlushCandidates`. When we call `findAndFlushCandidates`, it will iterate over our state store, check whether the cap for a pair is reached, flush the pair using the `this.context.forward(key, value)` call, and delete the pair from the state store. You are probably wondering where the data sits and what a state store is. It will be beneficial both to people who work with Kafka Streams and to people who are integrating Kafka Streams with their Spring applications. `valFilter` is set to MN in the Spec class. This kind of buffering and deduplication is not always trivial to implement when using job queues. If it isn't, we add the key along with a timestamp. Therefore, it's quite expensive to retrieve and compute this in real time. Kafka Streams is a relatively young project that lacks many features that, for example, already exist in Apache Storm (not directly comparable, but oh well). GitHub: https://github.com/yeralin/custom-kafka-streams-transformer-demo. With an empty table, MySQL effectively locks the entire index, so every concurrent transaction has to wait for that lock. We got rid of this kind of locking by lowering the transaction isolation level from MySQL's default of REPEATABLE READ to READ COMMITTED. Cons: you will have to sacrifice some space on the Kafka brokers' side and some network traffic.
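A minimal sketch of the `findAndFlushCandidates` step, written in plain Java so it runs without a broker. A `HashMap` stands in for the state store and a `BiConsumer` stands in for `this.context.forward(key, value)`; the class name `FlushSketch` is hypothetical, and the `>=` comparison assumes "reaching the cap" means the count is at least the cap. With a real `KeyValueStore`, one would iterate over `store.all()` (closing the returned iterator) and call `store.delete(key)` for each flushed pair.

```java
import java.util.HashMap;
import java.util.Iterator;
import java.util.Map;
import java.util.function.BiConsumer;

// Hypothetical stand-in for the CustomProcessor's flush step.
class FlushSketch {

    // Forwards every pair whose count reached the cap, then deletes it from the store.
    static void findAndFlushCandidates(Map<String, Integer> store, int counterCap,
                                       BiConsumer<String, Integer> forward) {
        Iterator<Map.Entry<String, Integer>> it = store.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Integer> pair = it.next();
            if (pair.getValue() >= counterCap) {
                forward.accept(pair.getKey(), pair.getValue()); // stand-in for context.forward
                it.remove(); // delete the flushed pair from the store
            }
        }
    }

    public static void main(String[] args) {
        Map<String, Integer> store = new HashMap<>(Map.of("a", 6, "b", 9, "c", 9));
        findAndFlushCandidates(store, 9, (k, v) -> System.out.println("forwarded " + k + ":" + v));
        System.out.println(store); // prints {a=6}
    }
}
```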


Also, we expect the updates to be in near real-time. But what about scalability? It will aggregate them as a:6, b:9, c:9; then, since b and c reached the cap, it will flush them down the stream from our transformer. VisitorProcessor implements the init(), transform() and punctuate() methods of the Transformer and Punctuator interfaces. Before we begin going through the Kafka Streams transformation examples, I'd recommend viewing the following short screencast where I demonstrate how to run the Scala source code examples in IntelliJ.

Then we have our service's Kafka consumer(s) work off that topic and update the cache entries. Bravo Six, Going Realtime. You can find the complete working code here. Within the Transformer, the state is obtained via the ProcessorContext. I think we are done here! This blog post is an account of the issues we faced while working on the Kafka Streams based solution and how we were able to find a way around them.



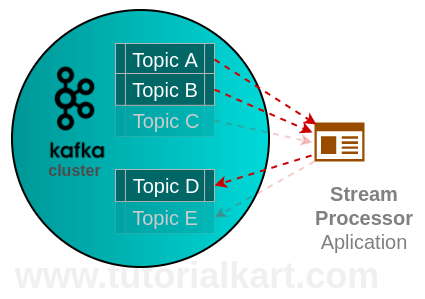

The Visitor Java class represents the input Kafka message, and the VisitorAggregated Java class is used to batch the updates; each has its own JSON representation. After a few load test runs, we observed certain areas of concern. As previously mentioned, stateful transformations depend on maintaining the state of the processing. Kafka Streams transformations provide the ability to perform actions on Kafka Streams such as filtering and updating values in the stream. The state store will be created before we initialize our CustomProcessor; all we need to do is pass stateStoreName to it during initialization (more about it later).

Before we go into the source code examples, let's cover a little background and also a screencast of running through the examples. Hence, they are stored on the Kafka broker, not inside our service. Here we simply create a new key-value pair with the same key but an updated value.
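As a rough illustration of that stateless step, here is a plain-Java sketch over a list of key-value pairs. With Kafka Streams the equivalent would be something like `stream.map((key, value) -> KeyValue.pair(key, value.toUpperCase()))`; the uppercasing and the class name `SameKeyMapSketch` are assumptions for illustration only.

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical stand-in for a stateless map step over (key, value) records.
class SameKeyMapSketch {
    static List<Map.Entry<String, String>> updateValues(List<Map.Entry<String, String>> records) {
        return records.stream()
                // New pair: same key, updated value (uppercasing is an assumed example).
                .map(r -> Map.entry(r.getKey(), r.getValue().toUpperCase()))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(updateValues(List.of(Map.entry("k1", "aaabbb")))); // prints [k1=AAABBB]
    }
}
```

When only the value changes, Kafka Streams' `mapValues` is usually the better choice, because `map` may change the key and therefore marks the stream for repartitioning before any later key-based operation.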

We needed something beyond what the Kafka Streams DSL operators offered. Copyright Wingify. These transformations have similarities to functional combinators found in languages such as Scala.
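The `flatMap` combinator is a good example of that similarity: one input record can become zero, one, or many output records. The plain-Java sketch below splits each value into words while keeping the input key; in Kafka Streams this is roughly `stream.flatMapValues(value -> Arrays.asList(value.split(" ")))`. The word-splitting rule and the class name `FlatMapSketch` are assumptions for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical stand-in for a flatMap-style step that keeps the input key.
class FlatMapSketch {
    static List<Map.Entry<String, String>> splitWords(List<Map.Entry<String, String>> records) {
        return records.stream()
                .flatMap(r -> Arrays.stream(r.getValue().split(" "))
                        .filter(word -> !word.isEmpty()) // an empty value yields no records
                        .map(word -> Map.entry(r.getKey(), word)))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(splitWords(List.of(Map.entry("k1", "hello world")))); // prints [k1=hello, k1=world]
    }
}
```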

Consistency: We want to guarantee that if our data is updated, its cached representation would also be updated. Using state stores and the Processor API, we were able to batch updates in a predictable and time-bound manner without the overhead of a repartition. Dr. Benedikt Linse. (Because I can!)

All of this happens independently of the request that modified the database, keeping those requests resilient. In case updates to the key-value store have to be persisted, a persistent (disk-backed) store can be used instead of an in-memory one. A background thread listens for the termination signal and ensures a graceful shutdown of the Kafka Streams application. Well, I didn't tell you the whole story. Let's also pass our counter cap while we are at it. The transform method will be receiving key-value pairs that we will need to aggregate (in our case, the values will be the messages from the earlier example: aaabbb, bbbccc, bbbccc, cccaaa). We will have to split them into characters (unfortunately there is no character (de)serializer, so I have to store them as one-character strings), aggregate them, and put them into a state store. Pretty simple, right? To process the inserts to the outbox table, we use Debezium, which follows the MySQL binlog and writes any new entries to a Kafka topic. A transformer can use a state store, i.e. a state that is available beyond a single call of transform(Object, Object). How can we guarantee this when the database and our job queue can fail independently of each other? Stateful transformations, on the other hand, perform a round-trip to the Kafka broker(s) to persist data transformations as they flow. Let's create a class CustomProcessor that will implement the Transformer interface.
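The aggregation part of `transform` can be sketched in plain Java as follows, assuming a `HashMap<String, Integer>` in place of the state store; `AggregateSketch` and `snapshot` are hypothetical names. Feeding it the four example messages produces the counts a:6, b:9, c:9. A real `KeyValueStore` has no `merge` method, so the equivalent there would be a `get` followed by a `put`.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for CustomProcessor.transform(): each message value is
// split into one-character strings and counted in a map acting as the state store.
class AggregateSketch {
    private final Map<String, Integer> stateStore = new HashMap<>();

    void transform(String key, String value) {
        for (char c : value.toCharArray()) {
            // One-character strings mirror the workaround for the missing
            // character (de)serializer.
            stateStore.merge(String.valueOf(c), 1, Integer::sum);
        }
    }

    // Immutable copy of the current counts, for inspection.
    Map<String, Integer> snapshot() {
        return Map.copyOf(stateStore);
    }

    public static void main(String[] args) {
        AggregateSketch processor = new AggregateSketch();
        for (String message : new String[] {"aaabbb", "bbbccc", "bbbccc", "cccaaa"}) {
            processor.transform("key", message);
        }
        System.out.println(processor.snapshot().equals(Map.of("a", 6, "b", 9, "c", 9))); // prints true
    }
}
```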

We also need a map holding the value associated with each key (a KeyValueStore). The timestamp is set, for example, five minutes in the future, and we also store that record's value in our map. Stateless transformations do not require state for processing, so data is not sent (round-tripped) to any internal Kafka topic. In one of our earlier blog posts, we discussed how the windowing and aggregation features of Kafka Streams allowed us to aggregate events in a time interval and reduce update operations on a database. Nevertheless, with an application having nearly the same architecture working well in production, we began working on a solution.

Transforming records might result in an internal data redistribution if a key-based operator (like an aggregation or join) is applied to the resulting KStream. I'll try to post more interesting stuff I'm working on.

This ensures we only output at most one record for each key in any five-minute period. GetYourGuide is the booking platform for unforgettable travel experiences. However, a significant deviation in the Session Recordings feature was the size of the payload and the latency requirements. You can flush key-value pairs in two ways: by using the previously mentioned `this.context.forward(key, value)` call or by returning the pair in the transform method. Here is a caveat that you might understand only after working with Kafka Streams for a while. For our use case we need two state stores. Developers turn to the Processor API when the Apache Kafka Streams toolbox doesn't have the right tool for their needs or when they need better control over their data. The intention is to show how to create multiple new records for each input record. The Transformer interface is for stateful mapping of an input record to zero, one, or multiple new output records (both key and value type can be altered arbitrarily). Also, related to stateful Kafka Streams joins, you may wish to check out the previous Kafka Streams joins post. The number of events for that customer exceeded a certain threshold. We also want to test it, right? We need to buffer and deduplicate pending cache updates for a certain time to reduce the number of expensive database queries and computations our system makes.
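
The buffering and deduplication with two state stores can be sketched in plain Java as below. It assumes two in-memory maps (one for each key's flush deadline, one for its value) and a `punctuate` method that the caller invokes periodically with the current time. `DedupBuffer`, `offer`, and the "newest value wins" rule are assumptions for illustration. In a Kafka Streams transformer, the periodic call could be registered with `context.schedule(Duration, PunctuationType.WALL_CLOCK_TIME, callback)`.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for the two state stores: one map holds each pending
// key's flush deadline, the other holds the value to emit for that key.
class DedupBuffer {
    static final long DELAY_MS = 5 * 60 * 1000; // five minutes

    private final Map<String, Long> deadlines = new HashMap<>();
    private final Map<String, String> values = new HashMap<>();

    // Called for every incoming record (the transform step).
    void offer(String key, String value, long nowMs) {
        // Only the first record for a key sets the deadline.
        deadlines.putIfAbsent(key, nowMs + DELAY_MS);
        // Assumption: the newest value for a key replaces the buffered one.
        values.put(key, value);
    }

    // Called periodically (the punctuate step); returns the records to forward.
    List<Map.Entry<String, String>> punctuate(long nowMs) {
        List<Map.Entry<String, String>> due = new ArrayList<>();
        Iterator<Map.Entry<String, Long>> it = deadlines.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Long> entry = it.next();
            if (entry.getValue() <= nowMs) {
                due.add(Map.entry(entry.getKey(), values.remove(entry.getKey())));
                it.remove(); // the key is no longer pending
            }
        }
        return due;
    }

    public static void main(String[] args) {
        DedupBuffer buffer = new DedupBuffer();
        buffer.offer("activity-1", "v1", 0L);
        buffer.offer("activity-1", "v2", 1_000L); // duplicate within the window
        System.out.println(buffer.punctuate(299_999L)); // prints []
        System.out.println(buffer.punctuate(300_000L)); // prints [activity-1=v2]
    }
}
```

Because only the first record sets the deadline, a steady stream of updates for one key still flushes on schedule instead of being postponed indefinitely.
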
Kafka Streams transformations contain operations such as `filter`, `map`, `flatMap`, etc. In this example, we use the passed-in filter based on values in the KStream. After some research, we came across the Processor API. For example, let's imagine you wish to filter a stream for all keys starting with a particular string in a stream processor. The latter is the default in most other databases and is commonly recommended as the default for Spring services anyway. Let me know if you want some stateful examples in a later post. To populate the outbox table, we created a Hibernate event listener that notes which relevant entities got modified in the current transaction. Via org.apache.kafka.streams.processor.Punctuator#punctuate(long), the processing progress can be observed and additional periodic actions can be performed. Next, we instantiate the transformer and set up some Java beans in a configuration class using Spring Cloud Stream. The last step is to map these beans to input and output topics in a Spring properties file. We then scope this configuration class and properties to a specific Spring profile (same for the Kafka consumer), corresponding to a deployment which is separate from the one that serves web requests. The data for a single activity is sourced from over a dozen database tables, any of which might change from one second to the next, as our suppliers and staff modify and enter new information about our activities.
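
Filtering by key prefix is a stateless operation, so a plain-Java sketch over a list of key-value pairs captures it; in Kafka Streams it is roughly `stream.filter((key, value) -> key.startsWith(prefix))`. The prefix `user-`, the sample records, and the class name `PrefixFilterSketch` are assumptions for illustration.

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical stand-in for a stateless filter on record keys.
class PrefixFilterSketch {
    static List<Map.Entry<String, String>> keysStartingWith(
            List<Map.Entry<String, String>> records, String prefix) {
        return records.stream()
                // Each record is judged on its own; no state is kept between records.
                .filter(r -> r.getKey().startsWith(prefix))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Map.Entry<String, String>> records = List.of(
                Map.entry("user-1", "login"), Map.entry("order-7", "paid"), Map.entry("user-2", "logout"));
        System.out.println(keysStartingWith(records, "user-")); // prints [user-1=login, user-2=logout]
    }
}
```
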

To use org.apache.kafka.streams.processor.Punctuator#punctuate(long), a schedule must be registered.