The lakeFS open source project for data lakes provides data versioning, rollback, debugging, testing in isolation, and more, all in one tool.

Amazon extended its AWS service with AWS Data Lakes. A recent study showed HDInsight delivering 63% lower TCO than deploying Hadoop on premises over five years. Consider cross-training your data warehouse staff and analytics team in your data lake technology. Implementing a data lake requires a complete data analytics strategy coupled with proper data management and governance. Your Data Lake Store can hold trillions of files, and a single file can be larger than a petabyte, which is 200x larger than other cloud stores allow. Through tailor-made workshops, we will help you find the right approach for your company.

It also lets you scale storage and compute independently, giving you more economic flexibility than traditional big data solutions. Do you already have a functional team in place but lack manpower or specific experience? With lakeFS, your data lake is versioned, and you can easily time-travel between consistent snapshots of the lake. Your data warehouse staff's closeness to the data and their understanding of the enterprise data model will serve you well in the data lake environment. Review your current analytics tools and consider upgrading them to handle the data lake. Delta Lake is supported by more than 190 developers from over 70 organizations across multiple repositories. Queries are automatically optimized by moving processing close to the source data, without data movement, which maximizes performance and minimizes latency.
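
To make "time-travel between consistent snapshots" concrete, here is a minimal Python sketch of commit-based versioning. It is not the lakeFS API: the `VersionedLake` class and its `put`, `delete`, `commit`, `read` and `rollback` methods are hypothetical, and each commit simply freezes an in-memory copy of the object keys.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class VersionedLake:
    """Hypothetical toy model of a versioned data lake (not the lakeFS API).

    Writes go to a mutable staging area; commit() freezes the staging area
    into an immutable snapshot that can be read back later.
    """
    staging: Dict[str, bytes] = field(default_factory=dict)
    commits: List[Dict[str, bytes]] = field(default_factory=list)

    def put(self, key: str, data: bytes) -> None:
        self.staging[key] = data

    def delete(self, key: str) -> None:
        self.staging.pop(key, None)

    def commit(self) -> int:
        # Copy the staging area so later writes cannot change this snapshot.
        self.commits.append(dict(self.staging))
        return len(self.commits) - 1  # the new commit id

    def read(self, key: str, commit_id: int) -> bytes:
        # "Time travel": read an object as it existed at a given commit.
        return self.commits[commit_id][key]

    def rollback(self, commit_id: int) -> None:
        # Discard uncommitted changes and reset staging to an earlier snapshot.
        self.staging = dict(self.commits[commit_id])


if __name__ == "__main__":
    lake = VersionedLake()
    lake.put("sales/2023.csv", b"v1")
    good = lake.commit()
    lake.put("sales/2023.csv", b"v2 (bad load)")
    lake.commit()
    print(lake.read("sales/2023.csv", good))
    lake.rollback(good)
```

Each snapshot copies only references to immutable `bytes` values, so a rollback restores the earlier state exactly; a production system would share unchanged data between commits instead of copying the whole key map.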

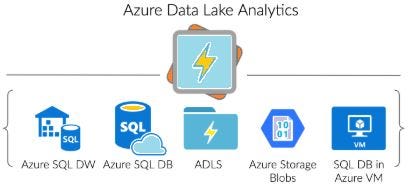

Finally, keep in mind that any major data-driven project will take time and resources. Each of these big data technologies, as well as ISV applications, is easily deployable as a managed cluster with enterprise-level security and monitoring. Data scientists and data engineers can easily access and process large volumes of data at high speed, giving them the flexibility they need for different data analytics activities. With no limits on data size and the ability to run massively parallel analytics, you can now unlock value from all your unstructured, semi-structured and structured data. Oracle offers Oracle Big Data Services, which include Hadoop-based data lakes and analysis through Oracle Cloud.

Azure Data Lake is presented as the first cloud analytics service where you can easily develop and run massively parallel data transformation and processing programs in U-SQL, R, Python, and .NET over petabytes of data. … must react by shortening software development and app deployment times. The data lake contains only the components needed for the client's specific use case. Data is always encrypted: in motion using SSL, and at rest using service-managed or user-managed HSM-backed keys in Azure Key Vault. Finally, you can meet security and regulatory compliance needs by auditing every access or configuration change to the system. Part of this transition involves choosing cloud service providers for a combination of database, software and analytics services. Consider initially limiting the amount and type of data stored in the data lake. Changes in the tools may be required depending on changes in the types of data (unstructured, etc.). In 2018, Gartner published a white paper analyzing potential data lake failure scenarios.
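
Auditing every access or configuration change is most useful when the audit trail itself cannot be quietly altered. The Python sketch below shows one common approach, a hash-chained append-only log, offered as an assumption rather than as how Azure implements auditing; the `AuditLog` class and its `record` and `verify` methods are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: Dict[str, str]) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class AuditLog:
    """Hypothetical append-only audit log; each entry hashes its predecessor."""
    entries: List[Dict[str, str]] = field(default_factory=list)

    def record(self, actor: str, action: str, target: str) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"actor": actor, "action": action, "target": target, "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute the chain; an edited entry, or one removed from the
        middle of the log, makes verification fail."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("actor", "action", "target", "prev")}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True
```

Note that chaining alone does not detect truncation of the newest entries; real systems typically also store the latest hash somewhere independent of the log.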

Microsoft extended its Azure cloud offering with Azure Data Lake Storage. As business intelligence (BI) and analytics move off-premises to the cloud, organizations realize that enterprise data warehouses are unable to meet operational demands. In both cases, no hardware, licenses, or service-specific support agreements are required. You will need qualified data science staff for both data storage and business analytics. The data lake is a single repository that includes raw data from source systems. Begin your journey by investing in the following. The shift stems from the fact that the on-premises data warehouse no longer serves current needs. You can authorize users and groups with fine-grained POSIX-based ACLs for all data in the Store, enabling role-based access control.
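
"POSIX-based ACLs" refers to the access-check model of POSIX.1e access control lists, as documented for Linux in acl(5): the owner entry is checked first, then named users, then any matching groups, then "other", with a mask capping named users and groups. The Python sketch below is a simplified model of that evaluation order, not the Azure Data Lake Storage implementation; the `Acl` structure and `check_access` function are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set

R, W, X = 4, 2, 1  # permission bits, as in rwx mode strings


@dataclass
class Acl:
    """Hypothetical representation of a POSIX ACL on one file or directory."""
    owner: str
    owning_group: str
    user_obj: int   # permissions of the owning user
    group_obj: int  # permissions of the owning group
    other: int      # permissions of everyone else
    named_users: Dict[str, int] = field(default_factory=dict)
    named_groups: Dict[str, int] = field(default_factory=dict)
    mask: Optional[int] = None  # upper bound for named entries and groups


def has(perms: int, wanted: int) -> bool:
    return (perms & wanted) == wanted


def check_access(acl: Acl, user: str, groups: Set[str], wanted: int) -> bool:
    """Simplified POSIX.1e access check, evaluated in the standard order."""
    mask = acl.mask if acl.mask is not None else R | W | X
    # 1. The owner is checked against the owner entry only (not masked).
    if user == acl.owner:
        return has(acl.user_obj, wanted)
    # 2. A named user entry applies, limited by the mask.
    if user in acl.named_users:
        return has(acl.named_users[user] & mask, wanted)
    # 3. Any matching group entry (owning or named) may grant access, also
    #    limited by the mask; if groups match but none grants, access is denied.
    matching = []
    if acl.owning_group in groups:
        matching.append(acl.group_obj)
    matching += [p for g, p in acl.named_groups.items() if g in groups]
    if matching:
        return any(has(p & mask, wanted) for p in matching)
    # 4. Otherwise the "other" entry decides.
    return has(acl.other, wanted)
```

The mask lets an administrator tighten every named user and group at once without editing each entry, while the owner and "other" entries stay unaffected.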

Together, the features of Delta Lake improve both the manageability and performance of working with data in cloud storage objects, and enable a lakehouse paradigm that combines the key features of data warehouses and data lakes: standard DBMS management functions usable against low-cost object stores. Finally, because Data Lake is in Azure, you can connect to any data generated by applications or ingested by devices in Internet of Things (IoT) scenarios. Several vendors have complete data lake solutions. Our data is transient, and dealing with it is an inefficient and manual task. This includes not only files and databases but also data sources from originating systems. These scenarios included the following: As you move towards implementing your first data lake, you still need to support mission-critical operational systems, including your data warehouse. A data lake can include databases, structured files, semi-structured data (such as XML and JSON) and unstructured data (such as sensor data, log files, audio and video). Data Lake is a key part of Cortana Intelligence, meaning that it works with Azure Synapse Analytics, Power BI, and Data Factory to form a complete cloud big data and advanced analytics platform that helps with everything from data preparation to interactive analytics on large-scale datasets. Thousands of companies are processing exabytes of data per month with Delta Lake.
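
Much of Delta Lake's manageability comes from its transaction log: each commit is stored under `_delta_log` as a JSON file of actions, and the data files that make up a given table version are found by replaying `add` and `remove` actions in order. The Python sketch below replays in-memory commit strings in that spirit. It is a simplification (checkpoints, metadata and protocol actions are ignored), not the Delta Lake or delta-rs API, and `active_files` is a hypothetical helper.

```python
import json
from typing import List, Set


def active_files(commits: List[str], version: int) -> Set[str]:
    """Return the data files live at `version` by replaying add/remove actions.

    `commits[i]` holds the newline-delimited JSON actions of commit i,
    standing in for one simplified _delta_log/<version>.json file each.
    """
    files: Set[str] = set()
    for commit in commits[: version + 1]:
        for line in commit.splitlines():
            if not line.strip():
                continue
            action = json.loads(line)
            if "add" in action:
                files.add(action["add"]["path"])
            elif "remove" in action:
                files.discard(action["remove"]["path"])
            # Other action types (metaData, protocol, commitInfo, ...) are skipped.
    return files


log = [
    '{"add": {"path": "part-000.parquet"}}\n{"add": {"path": "part-001.parquet"}}',
    '{"remove": {"path": "part-000.parquet"}}\n{"add": {"path": "part-002.parquet"}}',
]
```

Reading version 0 of `log` yields the two original files, while version 1 reflects the compaction-style rewrite; this replay is also what makes time travel to an older version cheap.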

Some tech managers consider the data lake to be their own analytics platform and ignore or underestimate their existing data management and data modeling knowledge. Is it time for IT leaders to rethink analytics budgets, move away from the warehouse and invest in data lakes? Within the project, we make decisions based on these rules. Data growth across the enterprise can flood a data lake with old, outdated, irrelevant or unknown data and make it useless. craftworks develops customized big data infrastructures and data lake solutions based on open source technologies, either on-premises or in the cloud (Microsoft Azure). Delta Lake is an open-source storage framework that enables building a Lakehouse architecture with compute engines including Spark, PrestoDB, Flink, Trino, and Hive, and APIs for Scala, Java, Rust, Ruby, and Python. Data Lake is a cost-effective solution for running big data workloads. The IBM solution is particularly interesting in its embrace of open source, following this new industry trend. Ensure that you have a complete and up-to-date enterprise data model that describes all of your data. This means that you don't have to rewrite code as you increase or decrease the size of the data stored or the amount of compute being spun up.
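
One way to keep data growth from flooding a data lake with old or unknown data is a retention check that flags objects for review. The Python sketch below assumes a policy the sources do not prescribe: anything older than a maximum age, or without a recorded owner, is flagged. The `LakeObject` fields, the `stale_objects` function and the 365-day default are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List, Optional


@dataclass
class LakeObject:
    """Hypothetical catalog record for one object in the lake."""
    path: str
    last_modified: datetime
    owner: Optional[str] = None  # None means nobody has claimed the data


def stale_objects(objects: Iterable[LakeObject], now: datetime,
                  max_age: timedelta = timedelta(days=365)) -> List[str]:
    """Return paths of objects older than max_age or with no known owner."""
    flagged = []
    for obj in objects:
        too_old = now - obj.last_modified > max_age
        unknown = obj.owner is None
        if too_old or unknown:
            flagged.append(obj.path)
    return flagged
```

Flagging rather than deleting keeps a human in the loop, which matters when "old" data may still be needed for audits or model retraining.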